Whether you’re formulating your startup’s first QA strategy or you’ve realized your existing strategy doesn’t support shipping fast, with quality, this post is for you.

In this piece, we focus on the practical frameworks and practices that’ll help you lay a strong foundation for quality assurance in your org. Among other things, you’ll learn:

Before we get into it: any successful QA strategy needs stakeholder support. This guide assumes you have buy-in from your software and management teams that product quality is key to protecting the interests of your business, and that quality can’t improve without better QA processes.

To figure out what success looks like for your quality assurance initiative, it’s often useful to start by asking: why, specifically, did we decide to develop a quality assurance strategy?

Usually there’s some sort of precipitating event, like an embarrassing bug showing up during a sales demo, or a bunch of customers reporting an issue affecting an important workflow in your app.

Instead of giving your QA strategy a generic goal that’s difficult to quantify (e.g., “improve software product quality” or “make our product high-quality”), narrow it down to something that ties directly to the pain your team is feeling at this moment.

Select a goal that’s objectively measurable so you can track the effectiveness of your efforts. Often, this approach aligns with a goal like: Within [time period], eliminate high-priority bugs reported by customers.
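To make “objectively measurable” concrete, here is a minimal sketch of how you might track that goal from an issue-tracker export. The record fields (`opened`, `priority`, `source`) and the sample data are hypothetical; adapt them to whatever your tracker actually provides.

```python
from collections import Counter
from datetime import date

# Hypothetical bug reports exported from an issue tracker.
bug_reports = [
    {"opened": date(2024, 3, 4), "priority": "high", "source": "customer"},
    {"opened": date(2024, 3, 18), "priority": "low", "source": "customer"},
    {"opened": date(2024, 3, 21), "priority": "high", "source": "internal"},
    {"opened": date(2024, 4, 2), "priority": "high", "source": "customer"},
    {"opened": date(2024, 4, 9), "priority": "high", "source": "customer"},
    {"opened": date(2024, 5, 14), "priority": "medium", "source": "customer"},
]


def high_priority_customer_bugs_by_month(reports):
    """Count only the bugs the goal targets: high priority and reported by customers."""
    counts = Counter()
    for report in reports:
        if report["priority"] == "high" and report["source"] == "customer":
            counts[report["opened"].strftime("%Y-%m")] += 1
    return dict(counts)


monthly_counts = high_priority_customer_bugs_by_month(bug_reports)
print(monthly_counts)
```

A month that doesn’t appear in the result had no qualifying bugs, which is the outcome the goal is aiming for.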

Why “high-priority bugs” and not just “bugs”? Because bugs are an inevitable part of building software, and not all bugs are equally important. The effort required to try to eliminate all bugs isn’t compatible with shipping fast or frequently, so modern software teams have to prioritize.

Regardless, don’t stress about defining the perfect initial goal: just like any other goal, your QA goal can change as you learn and become more effective.

If you’re new to software quality assurance practices, looking at a list of types of QA tests can feel overwhelming.

The good news is you don’t have to worry about all these types of tests during your initial foray into quality assurance. Accessibility, usability, user experience, security, performance testing, and other types are important at scale, but when you’re a resource-constrained startup, you can apply them selectively.

An effective software QA strategy can include just three types of functional tests. These tests will get you to sufficient test coverage and capture the vast majority of important bugs: unit tests, end-to-end (E2E) tests, and exploratory tests.

An automated unit test evaluates a specific, narrow function in the code. Unit tests are incredibly fast and cheap to run, so there’s mostly upside to running them early and often in the development process. They’re very useful for catching issues soon after they’re introduced, when they’re the least expensive to fix.
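As an illustration, a unit test might look like the sketch below, written with Python’s built-in `unittest` module. The function `apply_discount` and its rules are invented for this example; the point is only that each test checks one narrow behavior and runs in milliseconds.

```python
import unittest


def apply_discount(price_cents: int, percent: int) -> int:
    """Hypothetical function under test: return the price after a percentage discount."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price_cents - (price_cents * percent) // 100


class ApplyDiscountTest(unittest.TestCase):
    def test_typical_discount(self):
        self.assertEqual(apply_discount(2000, 25), 1500)

    def test_zero_discount_leaves_price_unchanged(self):
        self.assertEqual(apply_discount(2000, 0), 2000)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(2000, 150)


def run_tests() -> unittest.TestResult:
    """Load and run the test case above, returning the result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)


if __name__ == "__main__":
    run_tests()
```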

E2E tests evaluate end-user workflows from one end to the other. “Workflows” include finite tasks like: log into the app, create a user account, and change the user password.

When we talk about automating tests in this piece, we’re talking about E2E tests. While automated E2E tests are fast and inexpensive relative to manual testing, they’re not as fast and cheap as unit tests, so they need to be run more strategically. (At a minimum: right before releasing to production.)

But whereas unit tests look at each function in a vacuum, E2E tests confirm that all those functions work well together. Since E2E tests encompass all the systems and functionalities that make user workflows possible, they technically cover API testing, integration testing, and functional testing. So you get three for the price of one.

E2E tests are literally “scripted” — they follow the same set of steps every time. But plenty of bugs only surface when users go off-script. That’s where the unscripted nature of exploratory testing comes in. Exploratory testers manually interact with an app in creative and unexpected ways to find non-obvious issues off the “happy paths” covered by your automated end-to-end tests.

In addition to its AI-powered, no-code test automation platform, Rainforest also offers exploratory testing services to catch bugs your automated test coverage might miss. Talk to us to learn more.

When it comes to manual vs. automated testing, your testing approach should include as much automation as possible, because it’s faster and cheaper. But that’s not to say you can use automation for everything in your testing process — manual testing is still a better option for some scenarios.

Here’s a rule of thumb for your end-to-end testing strategy: use automation when you’re repeatedly running the same tests on mostly-stable features. Regression testing — which is run upon every release to confirm new changes haven’t broken existing functionality — is the perfect use case for automation.

On the other hand, if you’re actively developing a new feature that’s still evolving, manual testing typically makes more sense. Automated tests would require near-constant updating to keep up with the changes in the feature. And automated test maintenance is expensive, even in most no-code tools.

Manual testers are also a better fit for:

There are four major ongoing tasks in the software testing workflow:

Back when open-source frameworks were the only option for test automation, technical skills would dictate who got assigned these tasks. For example, only engineers familiar with frameworks like Selenium or Cypress who could “speak” relevant programming languages could write or maintain automated test cases.

(In case you’re not familiar with automated testing: test maintenance is the ongoing work to update automated tests to make sure they reflect the latest, intended version of your app. Every time you update a notable feature in your app, you’ll probably have to update — or maintain — one or more tests so they continue to be useful.)

These testing tools forced companies to either (a) hire specialized testing teams of QA automation engineers to handle their automated test suites, and/or (b) have their existing software engineers handle these software testing duties. Both of these options come with significant financial or opportunity costs.

According to publicly available salary data, hiring a QA engineer with a few years of experience in the U.S. will cost your startup more than $100,000 per year.

You could save that money by having your existing (and expensive) software engineers handle automated test creation and maintenance. But that’s time taken away from what those contributors are incentivized to do and what your startup wants them to do: ship code.

And we’re not talking about an inconsequential amount of their time being consumed.

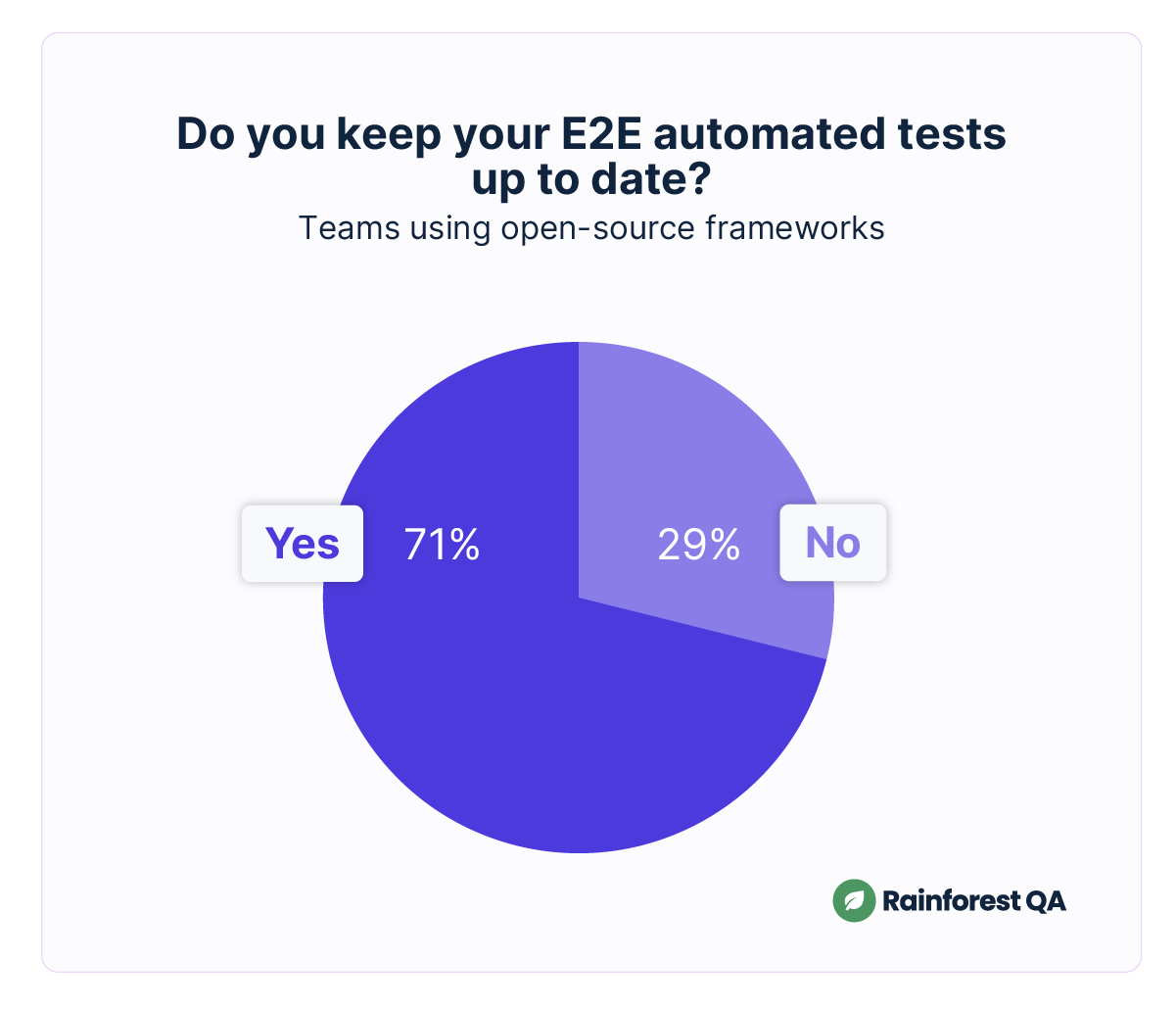

We ran a survey of 77 startup software teams (each with fewer than 200 employees). A full 29 percent of them fail to keep their open-source automated test suites updated because of the time commitment required.

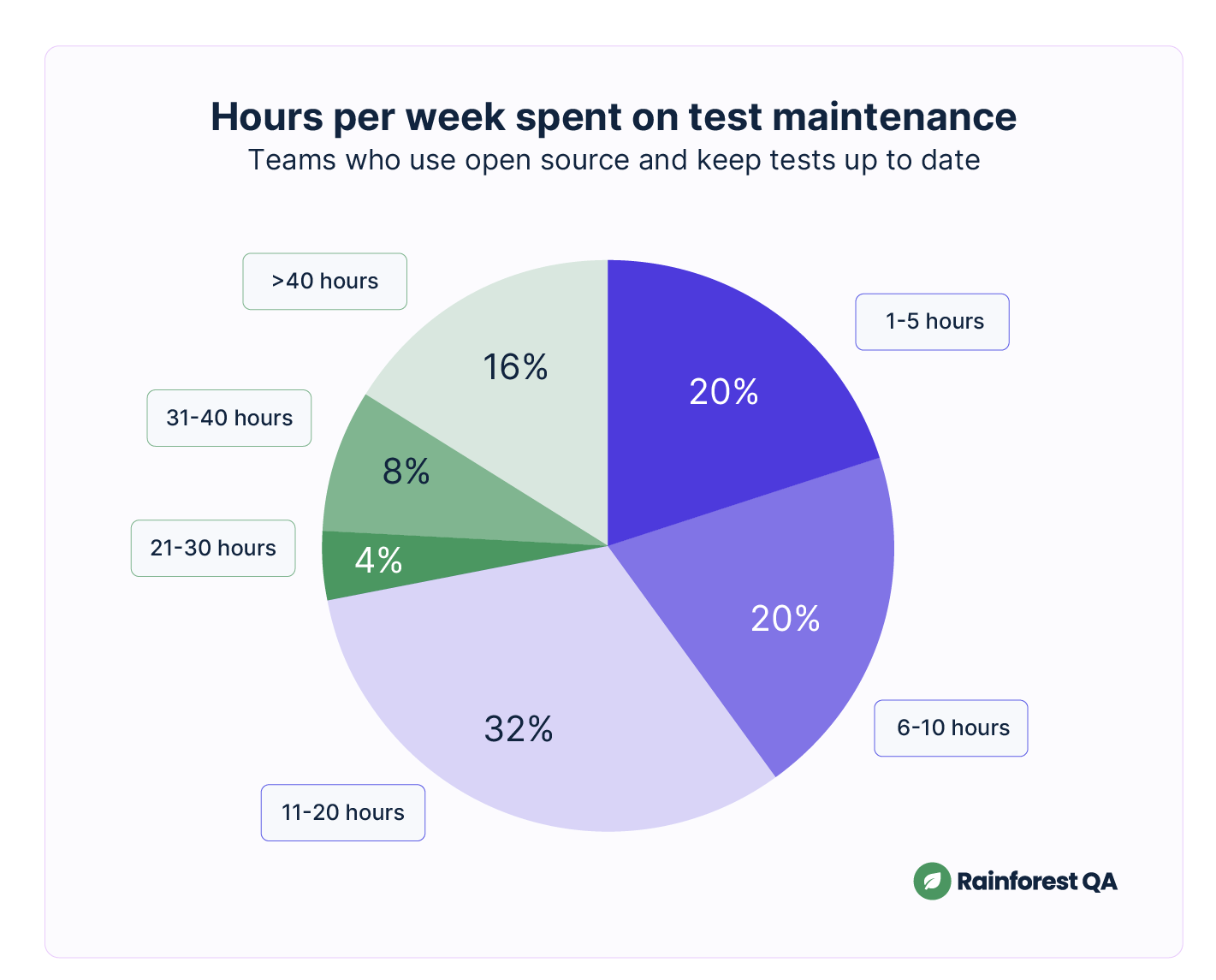

Of the teams that do manage to keep their test suites updated, 60 percent spend at least 11 hours per week on test maintenance.

No matter how you slice it, using open-source frameworks to automate your end-to-end tests is expensive and time-consuming.

And that’s not even considering the other costs involved with using open-source frameworks, like having to provision and manage the machines on which to run your tests, or the third-party software you need to extend these frameworks with things like detailed test results, test management capabilities, or the ability to do visual (vs. DOM) validations.

You could consider outsourcing quality assurance engineering overseas to someplace affordable like India, but those engagements tend to be plagued by communication and service-quality issues.

If your product development team aims to ship fast and frequently, the best thing to do for your velocity is to adopt a test automation solution that won’t bog you down in maintenance.

Generally speaking, it’s easier and faster to create and update automated tests with no- and low-code tools than with open-source frameworks. But it’s important to note that “no-/low-code” doesn’t mean “intuitive” — some of these tools are more complex than others, which means they’re less easy to learn and use.

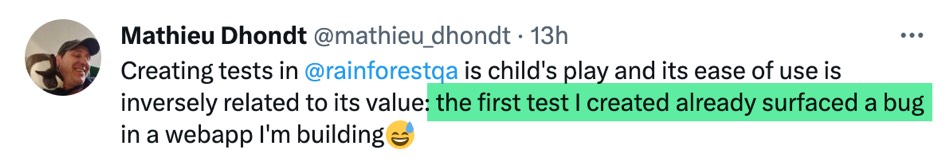

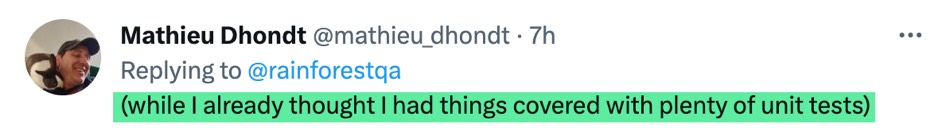

We’ve built the no-code Rainforest QA platform from the ground up to be so intuitive, anyone can jump in and create or maintain automated tests without any training. Even non-technical product managers and project managers can contribute.

Plus, we’ve deeply integrated generative AI in our test automation to help you avoid the otherwise time-consuming and annoying tasks involved with keeping an automated test suite up to date.

Using Rainforest AI, many of your tests can automatically “self-heal” when you make intended changes to your app. That means fewer false-positive test failures to investigate, and less interruptive test maintenance.

So, using the right no-code test automation tool is going to be a lot more time-efficient than having someone write and update automated tests with an open-source framework.

It’s going to be a lot more cost-effective than hiring a QA team of one or more engineers, too. For example, even if you add our automated testing services to a Rainforest plan to handle all test creation and maintenance for you, it’ll still cost less than half as much as hiring a full-time QA engineer.

And Rainforest includes everything you need — including test management, cloud-based machines on which to run your tests in parallel, and detailed test results including video recordings — so there won’t be any additional costs to getting your tests automated.

A test is only as good as its environment. Not putting enough investment into your QA environment can erode all the speed and quality benefits you thought automated testing would give you.

In short, your software testing environment needs to be production-like, or “prod-like.” You want confidence that new code will work as expected in the environment where customers will ultimately interact with it, so your testing environment needs to behave and perform like production.

Configuring your QA environment involves two main components: setting up the technology (including hardware, software, and other infrastructure like networking) and seeding test data.

Creating a QA testing environment that closely resembles prod is easier said than done. (Forget creating a perfect duplicate of prod in a testing environment, which is rarely practical, or even possible.) There’s always going to be a tradeoff between the fidelity of the environment’s resemblance to prod and the costs of creating that resemblance. You’re going to have to figure out the right balance of fidelity vs. cost for your team.

The most frequent issue we see is that teams underinvest in their testing environments to the point where automated tests consistently fail — and require time-consuming investigation — because the environments are so slow and unstable.

For example, imagine an automated test is looking for a particular element in your app’s UI, but the element doesn’t appear within the expected timeframe because the app is loading so slowly. That’s an avoidable test failure if you invest enough to get your QA environment to a “good enough” state of performance and stability.
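The sketch below simulates that failure mode. It isn’t tied to any particular testing tool: `wait_until` is a generic polling helper and `make_slow_element` is a hypothetical stand-in for a UI element that appears after a delay. The same element passes the check in a responsive environment and fails it in a slow one, even though nothing is wrong with the app itself.

```python
import time
from typing import Callable


def wait_until(condition: Callable[[], bool], timeout_s: float, poll_interval_s: float = 0.05) -> bool:
    """Poll `condition` until it returns True or `timeout_s` elapses."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        if condition():
            return True
        time.sleep(poll_interval_s)
    return condition()  # one final check at the deadline


def make_slow_element(appears_after_s: float) -> Callable[[], bool]:
    """Simulate a UI element that becomes visible only after a delay (hypothetical)."""
    ready_at = time.monotonic() + appears_after_s
    return lambda: time.monotonic() >= ready_at


# Responsive environment: the element shows up well inside the timeout.
fast_env_ok = wait_until(make_slow_element(0.1), timeout_s=0.5)

# Slow environment: the same element appears only after the timeout, so the check fails.
slow_env_ok = wait_until(make_slow_element(1.0), timeout_s=0.3)

print(fast_env_ok, slow_env_ok)
```

Raising timeouts can hide the symptom, but it also makes every run slower, which is why getting the environment itself to a “good enough” state tends to be the better fix.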

The goal of seeding prod-like test data in your QA environment’s database is to create states in which tests complete as fast as possible and with the fewest possible steps. (Among other benefits we’ll address in the next section, having fewer steps means fewer potential points of false-positive test failures.)

For example, if you run a SaaS app like we do, there are lots of things you’ll want to test that require being logged into a (test) user account. You don’t want to have to include steps in every one of your tests that involve creating and/or logging into an account — each of those tests will take longer to run, and any issue with the account-creation flow in your app will break all your tests. It’s a more efficient approach to write (shorter) test cases for environment states in which realistic fake-user accounts have already been created and logged in.

When determining what test data to seed, consider the core roles and functions within your application.

Reset your QA environment’s database to a “clean” state each time before you run your test suite. This is a task ideal for automation in your CI/CD process, but even if you don’t use CI/CD, you can use a webhook in Rainforest to initiate the reset.
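Here’s a minimal sketch of what a reset-and-seed step could look like, using an in-memory SQLite database as a stand-in for your QA database. The `users` table, the roles, and the email addresses are all hypothetical; a real reset script would target your own schema and would typically be triggered from your CI/CD pipeline or a webhook.

```python
import sqlite3

# Hypothetical seed data: one realistic fake account per core role.
SEED_USERS = [
    ("admin@example.test", "admin"),
    ("editor@example.test", "editor"),
    ("viewer@example.test", "viewer"),
]


def reset_and_seed(conn: sqlite3.Connection) -> None:
    """Drop whatever earlier test runs left behind, then load the known seed state."""
    with conn:  # commit on success, roll back on error
        conn.execute("DROP TABLE IF EXISTS users")
        conn.execute("CREATE TABLE users (email TEXT PRIMARY KEY, role TEXT NOT NULL)")
        conn.executemany("INSERT INTO users (email, role) VALUES (?, ?)", SEED_USERS)


conn = sqlite3.connect(":memory:")  # stands in for the QA database
reset_and_seed(conn)

# A previous test run leaves stray data behind...
with conn:
    conn.execute("INSERT INTO users VALUES ('leftover@example.test', 'viewer')")

# ...and the reset before the next run restores the clean state.
reset_and_seed(conn)
emails = [row[0] for row in conn.execute("SELECT email FROM users ORDER BY email")]
print(emails)
```

Because the function rebuilds the table from scratch, running it twice in a row leaves the database in the same state, so every test run starts from identical data.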

There are costs to doing too much, too quickly. In fact, you can save yourself a lot of grief by remembering that in many areas of test planning, less is more.

Keep each of your E2E tests as short as possible and make the scope of each one as limited as possible.

The latter part of that suggestion means you shouldn’t try to do more than necessary with any single test. For example, “log in,” “create a profile,” and “add friends” each represent a good scope for a test, whereas “log in then create a profile then add friends” is not a good scope.

Following this guidance means you’ll save time and money and be more effective:

When you start building out your suite of automated tests, start small. You’ll learn a lot in your early efforts about the best way to test certain scenarios and about the quirks of your testing environment. Better to learn these things before you create a bunch of tests you’d end up having to go back and update.

How small is “small”? Your first test plan milestone should be to create a smoke suite (which can be as small as five to ten tests) that covers the most important workflows in your app. Once your smoke suite and testing process are stable, expand to a regression suite. Eventually, you should have test coverage for every important feature and user flow in your app.

To figure out which user workflows are the most important, you can ask yourself:

Once your team has covered the most important user workflows in your app with E2E tests, the value of adding more tests quickly hits diminishing returns.

That’s because, as we’ve already established, not all bugs are important, and bugs in unimportant workflows are likely to be unimportant bugs. If additional testing were “free,” that’d be less of an issue, but every additional test comes with maintenance costs, which teams new to test automation consistently underestimate.

So, your goal isn’t to have 100% test coverage. Don’t create more tests than you can afford to maintain, and only test workflows you’d fix right away if they broke.

Learn more about the Snowplow Strategy, our approach to prioritizing test coverage.

You won’t create a culture of quality — in which everyone on the team feels accountable for quality and consistently takes the appropriate steps to deliver it — overnight.

In the meantime (and in perpetuity), defining and enforcing policies that reinforce good habits is your best approach. After all, success is the product of daily habits. It helps to have an executive sponsor in engineering and/or product leadership who’s bought in to your policies and will enforce them.

For starters, here are some of the policies we’ve adopted and strongly endorse for other teams:

If you’re part of a modern tech startup generally following agile principles, you’re probably already practicing CI/CD.

If not, implementing a CI/CD pipeline is one of the best DevOps practices you can adopt to improve and maintain software quality.

Other than the metric you defined to measure your main goal, consider metrics that optimize for the behaviors you want to reinforce in your quality assurance processes.

For example, measuring time-to-test — the time it takes for your test suite to run — focuses your team on finding ways to keep the release process moving quickly, like keeping each of your tests as short as possible. Use your policies to counteract any unhealthy shortcuts, like skipping important tests during release.

Time-to-fix, or the time required to fix an issue once it’s been identified by a failed test, reminds everyone that improving and maintaining quality involves promptly addressing problems when they’re found.
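Both metrics reduce to simple timestamp arithmetic. The sketch below assumes you can export suite start and finish times from your CI system and detection and fix times from your issue tracker; the records and field names here are made up for illustration.

```python
from datetime import datetime
from statistics import median

# Hypothetical records; in practice these would come from your CI system and issue tracker.
suite_runs = [
    {"started": datetime(2024, 5, 1, 9, 0), "finished": datetime(2024, 5, 1, 9, 12)},
    {"started": datetime(2024, 5, 2, 9, 0), "finished": datetime(2024, 5, 2, 9, 18)},
    {"started": datetime(2024, 5, 3, 9, 0), "finished": datetime(2024, 5, 3, 9, 15)},
]
failures = [
    {"detected": datetime(2024, 5, 1, 9, 12), "fixed": datetime(2024, 5, 1, 13, 12)},
    {"detected": datetime(2024, 5, 2, 9, 18), "fixed": datetime(2024, 5, 3, 9, 18)},
]


def median_elapsed_seconds(records, start_key, end_key) -> float:
    """Median elapsed time, in seconds, between two timestamps across all records."""
    return median((r[end_key] - r[start_key]).total_seconds() for r in records)


time_to_test_min = median_elapsed_seconds(suite_runs, "started", "finished") / 60
time_to_fix_hours = median_elapsed_seconds(failures, "detected", "fixed") / 3600

print(f"time-to-test: {time_to_test_min:.0f} min, time-to-fix: {time_to_fix_hours:.0f} h")
```

The median is used here rather than the mean so that a single unusually slow run or long-lived bug doesn’t dominate the number; that’s a design choice for this sketch, not a requirement.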

Of course, there’s more nuance to each of the methodologies and guidelines we’ve set forth in this post — so much of developing an effective QA strategy is easier said than done.

Every Rainforest customer gets a Customer Service Manager (CSM) dedicated to their account — they’re QA specialists who have helped hundreds of tech startups develop their software QA strategies. As a consultative partner, your CSM will work with you to design QA processes and practices for your specific product quality initiatives. Talk to us to learn more.